Michael Berry

According to legend, Leo Szilard's baths were ruined by his conversion to biology. He had enjoyed soaking for hours while thinking about physics. But as a convert he found this pleasure punctuated by the frequent need to leap out and search for a fact. In physics—particularly theoretical physics—we can get by with a few basic principles without knowing many facts; that is why the subject attracts those of us cursed with poor memory.

But there is a corpus of mathematical information that we do need. Much of this consists of formulas for the "special" functions. How many of us remember the expansion

Then in 1964 came Abramowitz and Stegun's Handbook of Mathematical Functions (A&S),3 perhaps the most successful work of mathematical reference ever published. It has been on the desk of every theoretical physicist. Over the years, I have worn out three copies. Several years ago, I was invited to contemplate being marooned on the proverbial desert island. What book would I most wish to have there, in addition to the Bible and the complete works of Shakespeare? My immediate answer was: A&S. If I could substitute for the Bible, I would choose Gradshteyn and Ryzhik's Table of Integrals, Series and Products.4 Compounding the impiety, I would give up Shakespeare in favor of Prudnikov, Brychkov and Marichev's Integrals and Series.5 On the island, there would be much time to think about physics and much physics to think about: waves on the water that carve ridges on the sand beneath and focus sunlight there; shapes of clouds; subtle tints in the sky... With the arrogance that keeps us theorists going, I harbor the delusion that it would be not too difficult to guess the underlying physics and formulate the governing equations. It is when contemplating how to solve these equations—to convert formulations into explanations—that humility sets in. Then, compendia of formulas become indispensable.

Nowadays the emphasis is shifting away from books towards computers. With a few keystrokes, the expansion

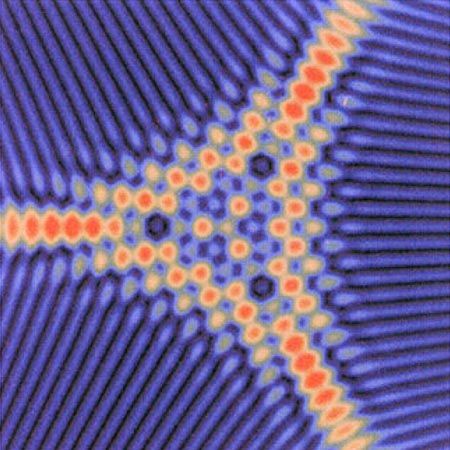

The DLMF, NIST's Digital Library of Mathematical Functions, will reflect a substantial increase in our knowledge of special functions since 1964, and will also include new families of functions. Some of these functions were (with one class of exceptions) known to mathematicians in 1964, but they were not well known to scientists, and had rarely been applied in physics. They are new in the sense that, in the years since 1964, they have been found useful in several branches of physics. For example, string theory and quantum chaology now make use of automorphic functions and zeta functions; in the theory of solitons and integrable dynamical systems, Painlevé transcendents are widely employed; and in optics and quantum mechanics, a central role is played by "diffraction catastrophe" integrals, generated by the polynomials of singularity theory—my own favorite, and the subject of a chapter I am writing with Christopher Howls for the DLMF.

This continuing and indeed increasing reliance on special functions is a surprising development in the sociology of our profession. One of the principal applications of these functions was in the compact expression of approximations to physical problems for which explicit analytical solutions could not be found. But since the 1960s, when scientific computing became widespread, direct and "exact" numerical solution of the equations of physics has become available in many cases. It was often claimed that this would make the special functions redundant. Similar skepticism came from some pure mathematicians, whose ignorance about special functions, and lack of interest in them, was almost total. I remember that when singularity theory was being applied to optics in the 1970s, and I was seeking a graduate student to pursue these investigations, a mathematician recommended somebody as being very bright, very knowledgeable, and interested in applications. But this student had never heard of Bessel functions (nor could he carry out the simplest integrations, but that is another story).

The persistence of special functions is puzzling as well as surprising. What are they, other than just names for mathematical objects that are useful only in situations of contrived simplicity? Why are we so pleased when a complicated calculation "comes out" as a Bessel function, or a Laguerre polynomial? What determines which functions are "special"? These are slippery and subtle questions to which I do not have clear answers. Instead, I offer the following observations.

There are mathematical theories in which some classes of special functions appear naturally. A familiar classification is by increasing complexity, starting with polynomials and algebraic functions and progressing through the "elementary" or "lower" transcendental functions (logarithms, exponentials, sines and cosines,

One reason for the continuing popularity of special functions could be that they enshrine sets of recognizable and communicable patterns and so constitute a common currency. Compilations like A&S and the DLMF assist the process of standardization, much as a dictionary enshrines the words in common use at a given time. Formal grammar, while interesting for its own sake, is rarely useful to those who use natural language to communicate. Arguing by analogy, I wonder if that is why the formal classifications of special functions have not proved very useful in applications.

Sometimes the patterns embodying special functions are conjured up in the form of pictures. I wonder how useful sines and cosines would be without the images, which we all share, of how they oscillate. In 1960, the publication in J&E of a

"New" is important here. Just as new words come into the language, so the set of special functions increases. The increase is driven by more sophisticated applications, and by new technology that enables more functions to be depicted in forms that can be readily assimilated.

Sometimes the patterns are associated with the asymptotic behavior of the functions, or of their singularities. Of the two Airy functions, Ai is the one that decays towards infinity, while Bi grows;
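The contrast between Ai and Bi can be checked numerically. The following Python sketch is an illustration under stated assumptions, not a method taken from A&S or the DLMF: it sums the Maclaurin series of the two solutions of Airy's equation y'' = xy, fixed by the standard values Ai(0) = 1/(3^(2/3) Γ(2/3)) and Ai'(0) = -1/(3^(1/3) Γ(1/3)), and compares the results with the leading large-x forms Ai(x) ≈ e^(-ζ)/(2√π x^(1/4)) and Bi(x) ≈ e^(ζ)/(√π x^(1/4)), where ζ = (2/3)x^(3/2). The helper names `airy_series` and `airy_asymptotic` are hypothetical, and the series is only trustworthy for moderate x (roughly x ≤ 6), because Ai emerges from the cancellation of two growing sums.

```python
import math

# Standard initial values: Ai(0) = C1, Ai'(0) = -C2, Bi(0) = sqrt(3)*C1, Bi'(0) = sqrt(3)*C2.
C1 = 1.0 / (3.0 ** (2.0 / 3.0) * math.gamma(2.0 / 3.0))
C2 = 1.0 / (3.0 ** (1.0 / 3.0) * math.gamma(1.0 / 3.0))


def airy_series(x, terms=80):
    """Return (Ai(x), Bi(x)) from the Maclaurin series of y'' = x*y.

    f solves the equation with f(0) = 1, f'(0) = 0; g with g(0) = 0, g'(0) = 1.
    Hypothetical helper: reliable only for moderate x, since Ai comes from a
    cancellation between two growing sums.
    """
    x3 = x ** 3
    f_term, g_term = 1.0, x
    f_sum, g_sum = f_term, g_term
    for k in range(terms):
        # Matching powers of x in y'' = x*y gives these three-step recurrences.
        f_term *= x3 / ((3 * k + 2) * (3 * k + 3))
        g_term *= x3 / ((3 * k + 3) * (3 * k + 4))
        f_sum += f_term
        g_sum += g_term
    ai = C1 * f_sum - C2 * g_sum
    bi = math.sqrt(3.0) * (C1 * f_sum + C2 * g_sum)
    return ai, bi


def airy_asymptotic(x):
    """Leading large-x forms: Ai ~ exp(-zeta)/(2 sqrt(pi) x^(1/4)), Bi ~ exp(zeta)/(sqrt(pi) x^(1/4))."""
    zeta = 2.0 / 3.0 * x ** 1.5
    prefactor = 1.0 / (math.sqrt(math.pi) * x ** 0.25)
    return 0.5 * prefactor * math.exp(-zeta), prefactor * math.exp(zeta)


for x in (1.0, 2.0, 4.0, 6.0):
    ai, bi = airy_series(x)
    ai_asym, bi_asym = airy_asymptotic(x)
    print(f"x = {x:.1f}: Ai = {ai:.4e} (ratio {ai / ai_asym:.3f}), "
          f"Bi = {bi:.4e} (ratio {bi / bi_asym:.3f})")
```

The printed ratios drift towards 1 as x grows, with Ai shrinking and Bi growing roughly like exp(∓ζ); a production routine would switch to the asymptotic expansion, or to some other method, well before the cancellation in Ai becomes severe.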

Perhaps standardization is simply a matter of establishing uniformity of definition and notation. Although simple, this is far from trivial. To emphasize the importance of notation, Robert Dingle in his graduate lectures in theoretical physics at the University of St. Andrews in Scotland would occasionally replace the letters representing variables by nameless invented squiggles, thereby inducing instant incomprehensibility. Extending this one level higher, to the names of functions, just imagine how much confusion the physicist John Doe would cause if he insisted on replacing

To paraphrase an aphorism attributed to the biochemist Albert Szent-Györgyi, perhaps special functions provide an economical and shared culture analogous to books: places to keep our knowledge in, so that we can use our heads for better things.

| 1. | E. Jahnke, F. Emde, Tables of Functions with Formulae and Curves, Dover Publications, New York (1945). |

| 2. | A. Erdélyi, W. Magnus, F. Oberhettinger, F. G. Tricomi, Higher Transcendental Functions, 5 vols., Krieger Publishing, Melbourne, Fla. (1981) [first published in 1953]. |

| 3. | M. Abramowitz, I. A. Stegun, eds., Handbook of Mathematical Functions with Formulas, Graphs, and Mathematical Tables, National Bureau of Standards Applied Mathematics Series, vol. 55, US Government Printing Office, Washington, DC (1964). |

| 4. | I. S. Gradshteyn, I. M. Ryzhik, Table of Integrals, Series, and Products, 6th ed. (translated from Russian by Scripta Technika), Academic Press, New York (2000) [first published in 1965]. |

| 5. | A. P. Prudnikov, Yu. A. Brychkov, O. I. Marichev, Integrals and Series, 5 vols. (translated from Russian by N. M. Queen), Gordon and Breach, New York. |

Biting into an apple and finding a maggot is unpleasant enough, but finding half a maggot is worse. Discovering

In physics, limits abound and are fundamental in the passage between descriptions of nature at different levels. The classical world is the limit of the quantum world when Planck's

The coherence of our physical worldview requires the reassurance that, singularities notwithstanding, quantum mechanics does reduce to classical mechanics, statistical mechanics does reduce to thermodynamics, and so on, in the appropriate limits. We know that when calculating the orbit of a spacecraft (and indeed knowing that it has an orbit) we can safely use classical mechanics, rather than having to solve the Schrödinger equation. An engineer designing a bridge can rely on continuum elasticity theory, without needing to know the atomic arrangements underlying the equation of state of the materials used in the construction. However, getting these reassurances from fundamental theory can involve subtle and unexpected concepts.

Perhaps the simplest example is two flashlights shining on a wall. Their combined light is twice as bright as when each shines separately: This is the optical embodiment of the equation 1 + 1 = 2.

Young's "demonstrable" invisibility requires an additional concept, later made precise by Augustin Jean Fresnel and Lord Rayleigh: The rapidly varying cos 2φ must be replaced by its average value, namely zero, reflecting the finite resolution of the detectors, the fact that the light beam is not monochromatic, and the rapid phase variations in the uncoordinated light from the two flashlights. Only then does
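The averaging argument can be illustrated with a short sketch. It assumes, for illustration only, two beams of equal unit intensity whose relative phase is 2φ, so that the combined intensity at a point is |1 + e^(2iφ)|² = 2 + 2cos 2φ = 4cos²φ, which swings between 0 (dark fringe) and 4 (bright fringe). Averaging over phases spread evenly across a full period, a stand-in for the uncontrolled phase variations between uncoordinated sources, removes the cos 2φ term and leaves 2: the "twice as bright" of the two flashlights. The function names are hypothetical.

```python
import cmath
import math


def intensity(phi):
    """Intensity of two superposed unit-amplitude waves with relative phase 2*phi."""
    return abs(1 + cmath.exp(2j * phi)) ** 2  # equals 2 + 2*cos(2*phi)


def averaged_intensity(n_phases=1000):
    """Average the intensity over phases spread evenly across one period of cos(2*phi)."""
    phases = [math.pi * k / n_phases for k in range(n_phases)]
    return sum(intensity(p) for p in phases) / n_phases


print(intensity(0.0))          # bright fringe
print(intensity(math.pi / 2))  # dark fringe
print(averaged_intensity())    # interference term averages away
```

Evenly spaced phases make the cancellation exact up to rounding; averaging over random phases would give the same result only statistically, with fluctuations that shrink as more phases are included.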

Nowadays this application of the idea that the average of a cosine is zero, elaborated and reincarnated, is called decoherence. This might seem a bombastic redescription of the commonplace, but the applications of decoherence are far from trivial. Decoherence quantifies the uncontrolled extraneous influences that could upset the delicate superpositions in quantum computers. And, as we have learned from the work of Wojciech Zurek and others, the same concept governs the emergence of the classical from the quantum world in situations more sophisticated than Young's, where chaos is involved. For example, the chaotic tumbling of Saturn's satellite Hyperion, regarded as a quantum rotator with about

Other reassurances are equally hard to come by. For example, formally obtaining thermodynamics from statistical mechanics involves applying the mathematical

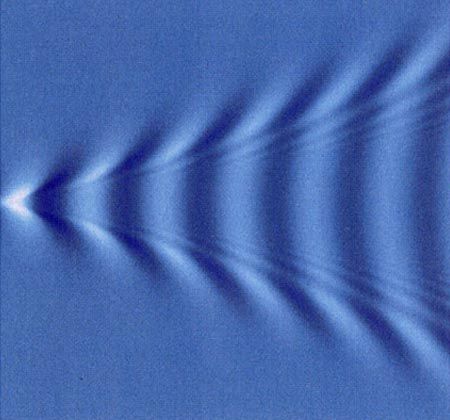

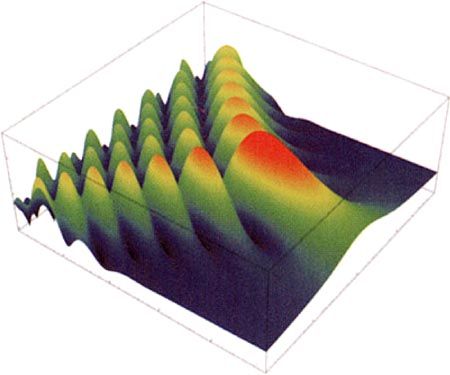

In quantum mechanics (and indeed the physics of waves of all kinds), a range of new phenomena lurk in the borderland with classical mechanics.

Across the boundary between classically allowed and forbidden regions in a

New ideas in physics often inspire, or are inspired by, new ideas in mathematics, and singular limits are no exception. Underlying critical phenomena is the renormalization group, which determines how systems transform, or remain invariant, under changes of scale—a fertile idea that is essentially mathematical but whose foundations have not been rigorously established. The quantum-classical connection involves divergent infinite series (for example, in powers
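Because the example in the text is cut short, the sketch below substitutes a standard mathematical case rather than a physical one: Euler's series Σ (-1)^n n! x^n, which diverges for every x ≠ 0 yet is the asymptotic expansion, for small positive x, of the integral S(x) = ∫₀^∞ e^(-t)/(1 + xt) dt. Its partial sums first approach S(x) and then run away, so the best accuracy comes from stopping near the smallest term, around n ≈ 1/x. The integral is evaluated by Simpson's rule on a range truncated at t = 60, an assumption that is adequate here because e^(-60) is negligible; the function names are hypothetical.

```python
import math


def stieltjes_integral(x, t_max=60.0, n=6000):
    """S(x) = integral from 0 to infinity of exp(-t)/(1 + x*t) dt, by composite Simpson's rule.

    The upper limit is cut off at t_max, where exp(-t) is negligible; n must be even.
    """
    def integrand(t):
        return math.exp(-t) / (1.0 + x * t)

    h = t_max / n
    total = integrand(0.0) + integrand(t_max)
    for k in range(1, n):
        total += (4 if k % 2 else 2) * integrand(k * h)
    return total * h / 3.0


def euler_partial_sum(x, n_max):
    """Partial sum of Euler's divergent series: sum over n = 0..n_max of (-1)^n n! x^n."""
    return sum((-1) ** n * math.factorial(n) * x ** n for n in range(n_max + 1))


x = 0.1
exact = stieltjes_integral(x)
errors = [abs(euler_partial_sum(x, n) - exact) for n in range(31)]
best = min(range(31), key=lambda n: errors[n])
print(f"S({x}) = {exact:.8f}")
print(f"best truncation: n = {best}, error = {errors[best]:.2e}")
print(f"error at n = 30: {errors[30]:.2e}")
```

For x = 0.1 the smallest error, obtained by truncating near n = 9, is of order 10⁻⁴, while by n = 30 the partial sum is wildly wrong. For this particular series S(x) always lies between consecutive partial sums, so the first omitted term bounds the error of any truncation.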

Singular limits carry a clear message, which philosophers are beginning to hear:3 The physics of singular limits is the natural philosophy of renormalization and divergent series. Perhaps they are recognizing that some problems of theory reduction can themselves be reduced to tricky questions in mathematical asymptotics; this is an extension of the traditional philosophical method of argumentation based on words. Usually, we think of "applications" of science going from the more general to the more specific—physics to widgets—but this is an application that goes the other way: from physics to philosophy. One wonders if it counts with those journalists or administrators who like to question whether our research has applications. Probably not.

| 1. | M. V. Berry, in Quantum Mechanics: Scientific Perspectives on Divine Action, R. J. Russell, P. Clayton, K. Wegter-McNelly, J. Polkinghorne, eds., Vatican Observatory Publications, Vatican City State, and The Center for Theology and the Natural Sciences, Berkeley, Calif. (2001), p. 41. |

| 2. | P. W. Anderson, Science 177, 393 (1972). |

| 3. | R. W. Batterman, The Devil in the Details: Asymptotic Reasoning in Explanation, Reduction, and Emergence, Oxford University Press, New York (2002). |

Michael Berry is Royal Society Research Professor in the physics department of Bristol University, UK, and studies physical asymptotics.